Estimating Job Resources

When estimating how many resources to request for a job, you can use an interactive session started with the srun command.

To launch a 5-core interactive job, run:

[john@andes8 ~]$ srun --cpus-per-task=5 --pty /bin/bash

To get started, please copy a small zip archive containing some Python code and a sample submit script.

cp /dartfs-hpc/admin/Class_Examples.zip . && unzip Class_Examples.zip

The above command copies the file Class_Examples.zip from the location /dartfs-hpc/admin. The . tells cp to copy it into your current working directory. The && runs the next command only if the copy succeeds; that command unzips the contents of the archive into the directory you are in.

When estimating your resource utilization, you can use a program like top to monitor current utilization.

In this tutorial, let's open two terminals side by side. In one terminal we will launch our Python code from the folder we unzipped. In the other terminal we will run the command top -u to look at resource utilization.

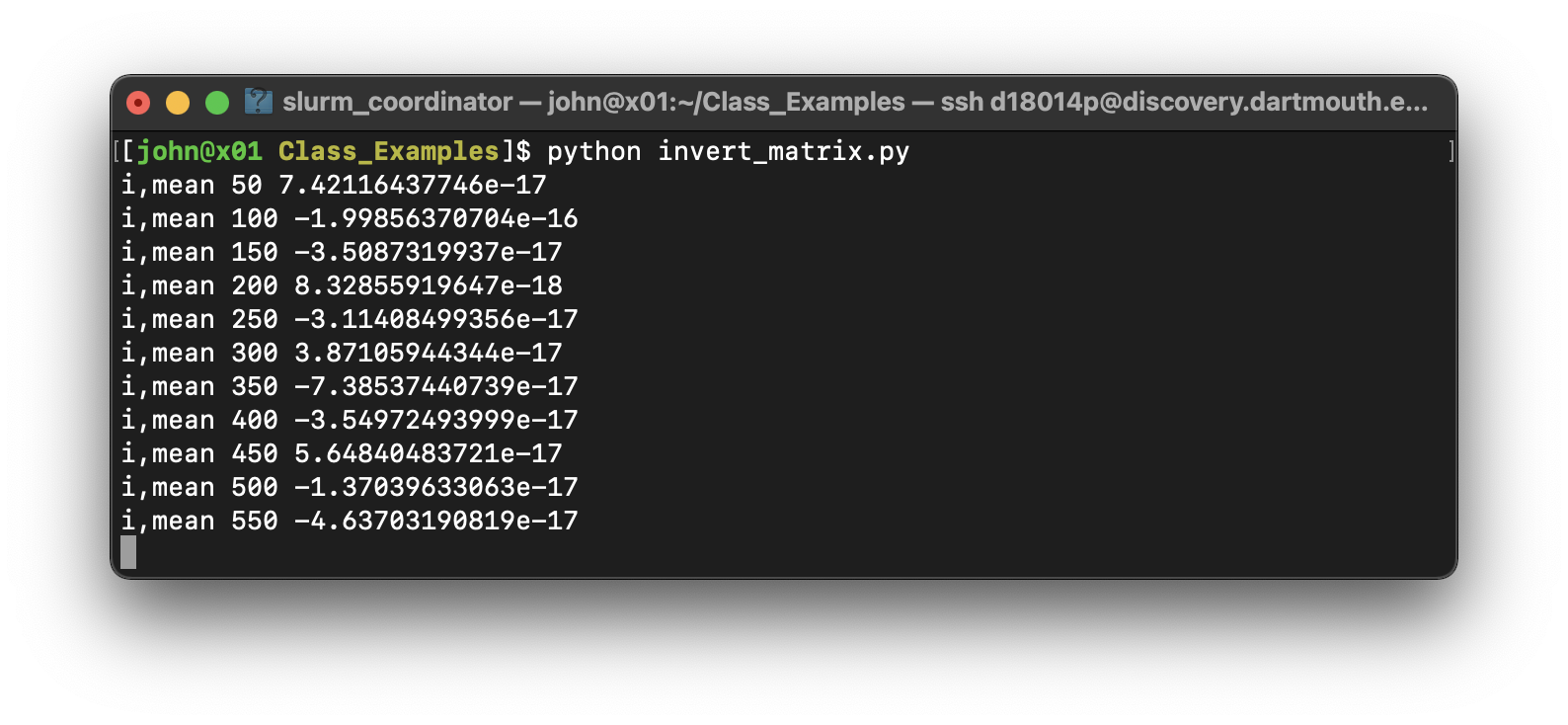

Inside the Class_Examples folder is a basic Python script we will use for estimating resources. The script is called invert_matrix.py. Let's run the script to see what it does.

cd Class_Examples

python3 invert_matrix.py

Once your Python command is running as in the image above, use the second terminal you opened to run the top command. My username is john, so my command will be:

top -u john

You can get out of top by simply pressing the letter q.

The top screen displays information about the state of the system, including the number of CPUs, the amount of system memory, and other useful details. In this case we are looking at two fields in particular: %CPU and RES, short for resident memory (the physical memory the process is currently using).

In the top output, the %CPU column is showing 97-99%. That is equivalent to 1 CPU. With this information I know to submit my job for 1 CPU in order for it to run efficiently.

In the other column, RES, we can see that we are not using quite a full GB of memory. We know from this output that requesting the job minimum of 8 GB, or even less, will be sufficient for our job.
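The way the two columns are read above can be sketched in Python. The helper names are hypothetical, the sample readings are illustrative rather than measured, and the sketch assumes top is reporting %CPU per core (100% means one fully used core) and RES in KiB, which is its usual default.

```python
import math


def cores_needed(cpu_percent):
    """Convert a top %CPU reading into whole cores (assumes 100% = 1 core)."""
    return max(1, math.ceil(cpu_percent / 100))


def kib_to_gib(res_kib):
    """Convert a RES value given in KiB into GiB."""
    return res_kib / (1024 ** 2)


# Illustrative readings only, not taken from a real run.
print(cores_needed(99))                 # a single busy core
print(round(kib_to_gib(900_000), 2))    # just under 1 GiB
```

A reading that hovers near 500% would map to 5 cores under the same assumption.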

The next resource you should consider estimating before submitting your job is walltime. Walltime is the amount of time your job is given to run. Estimating walltime accurately is good scheduler etiquette. From the command line, let's run our Python code again, but add the time command at the beginning.

time python3 invert_matrix.py

In the output of time, look at the real field. This is the time that passed between pressing the Enter key and the termination of the program. At this point, we know that we should submit for at least 5 minutes of walltime. That should allow enough time for the job to run to completion.
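The same wall-clock measurement can be sketched from inside Python. This is only an illustration of what the real field represents, not a replacement for time; the function name time_command is hypothetical, and the workload is a stand-in so the sketch runs anywhere.

```python
import subprocess
import sys
import time


def time_command(cmd):
    """Run cmd (a list of arguments) and return elapsed wall-clock seconds."""
    start = time.perf_counter()
    subprocess.run(cmd, check=True)
    return time.perf_counter() - start


# Stand-in workload; inside Class_Examples you could instead pass
# ["python3", "invert_matrix.py"].
elapsed = time_command([sys.executable, "-c", "sum(range(10**6))"])
print(f"real: {elapsed:.2f} s")
```

Calling time_command once per script gives two numbers that can be compared directly when checking whether a change made the job faster.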

Note

Determining walltime can be tricky. To avoid losing a job that runs out of time, it is suggested to add 15-20% more walltime than the job typically needs. This helps ensure the job has enough walltime to complete. For example, if your job takes 8 minutes to complete, submit for 10.
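The padding rule in this note is simple arithmetic, sketched below. The helper names are hypothetical, and the 20% margin is just the upper end of the suggested range; the HH:MM:SS string is one of the time formats sbatch accepts for --time.

```python
import math


def padded_walltime_minutes(measured_minutes, margin=0.20):
    """Add a safety margin to a measured runtime and round up to whole minutes."""
    return math.ceil(measured_minutes * (1 + margin))


def as_slurm_time(minutes):
    """Format whole minutes as HH:MM:SS."""
    hours, mins = divmod(minutes, 60)
    return f"{hours:02d}:{mins:02d}:00"


request = padded_walltime_minutes(8)    # 8 min * 1.2 = 9.6, rounded up
print(request, as_slurm_time(request))  # 10 00:10:00
```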

Before we move on to submitting the job via sbatch, let's adjust the script to use 5 cores instead of 1.

So that you do not have to open an editor, an updated version of the script is in the folder. Go ahead and take a look at the file invert_matrix_5_threads.py. Its contents are:

#!/usr/bin/python3
import os

# Set the number of threads to 5 to limit CPU usage to 5 cores
os.environ["OPENBLAS_NUM_THREADS"] = "5"  # For systems using OpenBLAS

# Now import NumPy after setting environment variables
import numpy as np
import sys

# Main computation loop
for i in range(2, 1501):
    x = np.random.rand(i, i)
    y = np.linalg.inv(x)
    z = np.dot(x, y)
    e = np.eye(i)
    r = z - e
    m = r.mean()
    if i % 50 == 0:
        print("i,mean", i, m)
        sys.stdout.flush()

Notice the line near the top that sets the thread count:

os.environ["OPENBLAS_NUM_THREADS"] = "5"

Let's go ahead and run that script now to see if using 5 cores speeds it up:

python3 invert_matrix_5_threads.py

Was it faster?
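As a possible variation, the thread count does not have to be hard-coded. The sketch below reads SLURM_CPUS_PER_TASK, which Slurm sets in the job environment when --cpus-per-task is requested, and falls back to 1 outside a job. The function name thread_count is hypothetical and this is not part of the provided class examples.

```python
import os


def thread_count(env=os.environ, default=1):
    """Return the CPUs Slurm granted to this task, or default outside a job."""
    value = env.get("SLURM_CPUS_PER_TASK", "")
    if value.isdigit() and int(value) > 0:
        return int(value)
    return default


# As in invert_matrix_5_threads.py, this must happen before NumPy is imported.
os.environ["OPENBLAS_NUM_THREADS"] = str(thread_count())
print("OPENBLAS_NUM_THREADS =", os.environ["OPENBLAS_NUM_THREADS"])
```

Inside the 5-core srun session started earlier, this would be expected to pick up 5, so the script and the allocation stay in step if the request changes.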